Tidbits¶

Weighting tricks¶

Combining weighted simulation¶

dCORSIKA¶

There are times when it is useful to combine weighted Corsika simulation from runs with different generation spectra. Calculating the appropriate weights for combined sets turns out to be remarkably simple, provided that one understands how the weighting works. The simplest form of an importance-sampling weight (in units of 1/time) for a cosmic-ray primary of energy \(E\) is:

where \(A_{total}\) is the integrated area-times-solid-angle of the sampling surface, \(N\) is the number of generated events, \(\Phi_{target}\) is flux one wishes to weight to, and \(p_{gen}\) is the probability of drawing the given \(E\) from the generation spectrum. For a power-law generation spectrum between energies \(E_0\) and \(E_1\) the generation probability is just:

The extension to multiple primaries, or multiple samples with different generation spectra, is straightforward. Instead of normalizing to a single generation spectrum \(N p_{gen}(E)\), one normalizes to a combined distribution that is a sum of the generation spectra, weighted by the number of entries drawn from each:

So the weight for events from the combined sample is:

The only technical difference to the single-set case is that the combined generation probability \(\hat{p}_{gen}(E,Z)\) is defined piecewise.

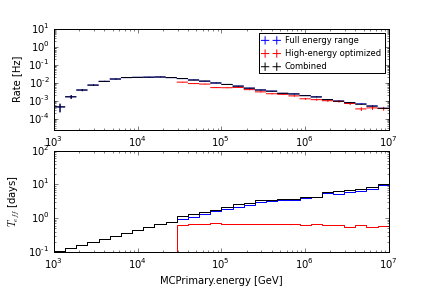

A demonstration of combined weights for different generation spectra. The “Full energy range” sample was generated from an \(E^{-2}\) spectrum across the full range of energies that trigger IceCube, while the “High-energy optimized” sample was generated from a more natural \(E^{-2.6}\) spectrum with a high energy treshold. While the combined histogram closely follows that of the (larger) full-range sample, the effective livetime plot shows how the addition of the high-threshold sample begins to reduce the variance of the combined sample above its threshold.

| Expression | Key in CorsikaWeightMap |

|---|---|

| \(A_{total}\) | AreaSum |

| \(r_{gen}\) | CylinderRadius |

| \(l_{gen}\) | CylinderLength |

| \(-\gamma\) | PrimarySpectralIndex |

| \(E_0\) | EnergyPrimaryMin |

| \(E_1\) | EnergyPrimaryMax |

| \(\int_{E_{0}}^{E_{1}} E^{-\gamma} dE\) | FluxSum |

| \(N_{i,Z}\) | NEvents (times NFiles) |

Aside: unweighted Hörandel¶

In RANPRI=2 mode, dCORSIKA generates primaries through iron from the parametrization of Hörandel (2003). Just as with power-law generated spectra, these sets can be combined with weighted sets given knowledge of \(N p_{gen}(E,Z)\). The generated flux is of the form:

The knee term makes the calculation of the normalization of the generation PDF somewhat tricky. As it turns out, the antiderivative involves a Gauss hypergeometric function:

The biasing mode of dCORSIKA (DSLOPE != 0) simply adds a constant factor to each \(\gamma_Z\); the knee simulated via acceptance-rejection sampling just as in the unbiased mode.

NeutrinoGenerator¶

The same trick works for neutrino-generator data, with a few names changed. Here the weight is of the form

where \(A \equiv \pi r_{gen}^2 / {\rm cm}\) is the total area of neutrino-generator’s injection surface, \(\Omega \equiv (\cos{\theta_1}-\cos{\theta_0})(\phi_1-\phi_0) / {\rm sr}\) is the solid angle over which events were generated, and \(p_{int}\) is the probability that the neutrino would have interacted at the point in the detector where it was forced to. Just as before, the weight for combined samples with different generation spectra is:

In contrast to Corsika weights, the multi-sample case for neutrino-generator data is slightly more annoying to implement, as one has to calculate \(A\), \(\Omega\), and \(\int_{E_{0}}^{E_{1}} E^{-\gamma} dE\) after the fact instread of taking the pre-packaged OneWeight.

| Expression | Key in I3MCWeightDict |

|---|---|

| \(r_{gen}\) | InjectionSurfaceR |

| \(l_{gen}\) | TotalDetectionLength |

| \(p_{int}\) | TotalInteractionProbabilityWeight |

| \(\gamma\) | PowerLawIndex |

| \(\log_{10}{E_0}\), \(\log_{10}{E_1}\) | MinEnergyLog, MaxEnergyLog |

| \(\theta_0\), \(\theta_1\) | MinZenith, MaxZenith |

| \(\phi_0\), \(\phi_1\) | MinAzimuth, MaxAzimuth |

| \(p_{int} \frac{A \Omega}{p_{gen}(E)}\) | OneWeight |

Effective areas¶

Note

I know that everyone knows how to do this; this section is more for my own benefit than anyone else’s.

NeutrinoGenerator¶

NeutrinoGenerator’s simulation volume is a cylinder of a fixed radius \(r_{gen}\) whose length varies for each injected neutrino energy to accomodate the range of possible muons produced in the interaction. Neutrino energies are drawn from a fixed power law distribution and forced to interact at a random distance along the cylinder chosen from a uniform distribution, and the probability of that forced interaction is stored as a weight \(p_{int}\). These factors can be combined to yield the commonly-used OneWeight:

where energy unit comes from the fact that the generation probability is in units of 1/energy. The weights of events surviving some selection can be used to express a detection efficiency in a given neutrino energy range in units of area:

where \(\Delta E\) is a range of energies being summed over. If the interaction probability were exactly one, the ratio of the effective area to the injection area would simply be a measure of the cut efficiency; the interaction probability folds in the effects of the neutrino-nucleon cross-section. Intuitively, the effective area is the area of an equivalent perfectly-absorbing neutrino detector. For point-like events (starting muons, cascades), it also makes sense to define an effective volume. Instead of counting up weighted contributions to the injection area, we can instead count up weighted contributions to the simulation volume, and define:

where \(l_{gen}\) length of the generation cylinder. If one wants to ignore the probability of seeing a neutrino in the first place and simply express an effective selection volume, one can omit the interaction probability to define a fiducial volume:

dCORSIKA¶

An analogous effective area can be defined for dCORSIKA simulation, with the simplification that forced interactions are unnecessary: